Synapse SQL Tutorial: Mastering Data Analytics with Synapse SQL

Introduction to Synapse SQL:

Azure Synapse Analytics is a fully managed cloud analytics service that combines SQL-based data warehousing, Apache Spark, and data integration pipelines in a single workspace. You can create and maintain data pipelines with ease and analyse the results in the same place. Its dedicated SQL pool (formerly Azure SQL Data Warehouse, or SQL DW) is an enterprise data warehouse that runs complex queries across petabytes of data efficiently thanks to massively parallel processing (MPP). Azure Synapse also integrates with Azure Machine Learning, Power BI, Azure Stream Analytics, and Azure Data Factory.

This article discusses the Synapse SQL architecture components. It also discusses how Azure Synapse SQL blends distributed query processing with Azure Storage to deliver excellent performance and scalability.

- Azure Synapse SQL architecture

- Serverless SQL pool

- Dedicated SQL pool

- Transact-SQL

- Synapse SQL resource

- Capacity limits for dedicated SQL pool

- Cost management for serverless SQL pool

1. Explain the Azure Synapse SQL architecture?

Azure Synapse SQL is a fully managed data integration and analytics service that brings together enterprise data warehousing, big data integration, and analytics in a single service. It offers a unified experience for data integration, data warehousing, and big data analytics, and allows you to access data stored in Azure Synapse SQL using the language and environment of your choice.

Azure Synapse SQL’s fundamental architecture contains the following components:

- SQL Server Engine: This is the core engine that provides the relational database capabilities for Azure Synapse SQL. It is based on Microsoft’s SQL Server technology and provides a range of features such as data security, scalability, and a rich set of built-in functions.

- Data Warehouse Compute: This is a separate compute layer that is optimized for data warehouse workloads. It uses a massively parallel processing (MPP) architecture that scales out across many compute nodes, and provides features such as columnar storage.

- Data Lake Compute: This is an additional compute layer, optimized for data lake workloads. It provides a wide range of features such as interactive analytics, machine learning, and data exploration.

- Data Integration: This component provides the integration capabilities needed to move data from various sources into Azure Synapse SQL. It includes services such as Azure Data Factory and Azure Data Lake Store.

- Management: This component provides the tools needed to manage the Azure Synapse SQL environment. It includes features such as monitoring, backup and restore, scalability, and security.

2. Describe the Synapse SQL architecture components?

Synapse SQL is the fully managed, cloud-based T-SQL analytics capability of Azure Synapse Analytics, and it integrates with Azure Machine Learning. Synapse SQL’s major components are:

Dedicated SQL pool: This is Synapse SQL’s provisioned data warehouse, where you load data into tables, develop data models, and run T-SQL queries. Compute is reserved in advance and billed for as long as the pool is running.

Serverless SQL pool (formerly SQL on-demand): This lets you run T-SQL queries directly on data stored in Azure Data Lake Storage Gen2 or Azure Blob Storage without first loading the data into a SQL pool and without provisioning any compute. You pay only for the data processed by each query. The names “SQL on-demand”, “SQL serverless”, and “SQL serverless on-demand” all refer to this one feature rather than to separate components.

Integration with Azure Machine Learning: To design, train, and deploy machine learning models on your data, Synapse SQL can be integrated with Azure Machine Learning.

Data integration: Synapse SQL integrates with a variety of Azure and third-party data sources, allowing you to ingest and transform data for analysis.

3. Explain Azure Storage in Synapse SQL?

Azure Synapse is a cloud-based data integration, analytics, and storage platform that provides a unified experience for big data and data warehousing. It enables data analysis with T-SQL and Apache Spark, and it keeps its data in Azure Storage.

Azure Storage is a cloud storage service offered by Microsoft that provides secure, durable, and scalable object storage. It is intended to store and manage huge volumes of data in the cloud, and it can serve many purposes, including acting as the storage backend for Azure Synapse SQL. Because storage is separate from compute, you can scale or pause compute without touching the data.

You can use Azure Synapse SQL and Azure Storage in tandem to analyse and store huge volumes of data in the cloud, leveraging Azure’s scalability, security, and durability to fulfil your data storage and processing requirements.

4. Explain the control node in Synapse SQL?

The control node in Synapse SQL is the SQL pool’s central node. It organises query execution and oversees data flow across compute nodes. It takes queries from client applications, parses and optimises them, and then distributes the query execution plan across the compute nodes. The control node also checks the state of the compute nodes and oversees the SQL pool’s overall health. It is in charge of maintaining metadata about database objects as well as enabling access to data stored in the pool.

5. Explain the compute nodes?

Compute nodes are the infrastructure that runs your SQL queries in Azure Synapse. They divide your workload into pieces that run in parallel, which improves query performance and scalability.

Both Synapse SQL consumption models rely on compute nodes, but they expose them differently: serverless SQL pool (formerly SQL on-demand) and dedicated SQL pool.

Serverless SQL pool is a fully managed, auto-scaling computing environment that allows you to run T-SQL queries on Azure Synapse workspace data. The service assigns compute nodes to each query automatically, so it scales in response to your workload and suits interactive queries and ad hoc exploration.

Dedicated SQL pool is a provisioned computing environment that allows you to run T-SQL queries and stored procedures on data loaded into the pool. You choose a service level, which determines how many compute nodes the pool uses (from 1 to 60), so it suits sustained workloads that need predictable performance.

You can select the model based on your workload needs as well as the scale and performance requirements of your applications.
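To make the relationship between compute nodes and parallel work more concrete, here is a minimal Python sketch. It assumes the dedicated SQL pool model, in which a table's data is always split into 60 distributions that are shared among the compute nodes. The function name and the rule that the node count must divide 60 evenly are assumptions made for this illustration.

```python
# Sketch: how 60 distributions could be shared among compute nodes.
TOTAL_DISTRIBUTIONS = 60  # number of distributions in a dedicated SQL pool


def distributions_per_node(compute_nodes: int) -> int:
    """Return how many distributions each compute node would handle.

    Assumption for this sketch: the node count divides 60 evenly.
    """
    if compute_nodes < 1 or TOTAL_DISTRIBUTIONS % compute_nodes != 0:
        raise ValueError("compute node count must evenly divide 60")
    return TOTAL_DISTRIBUTIONS // compute_nodes


if __name__ == "__main__":
    for nodes in (1, 2, 10, 60):
        share = distributions_per_node(nodes)
        print(f"{nodes} node(s): {share} distribution(s) per node")
```

Adding compute nodes does not change the number of distributions. It only reduces how many of them each node has to process.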

6.Describe Data movement service in Synapse SQL ?

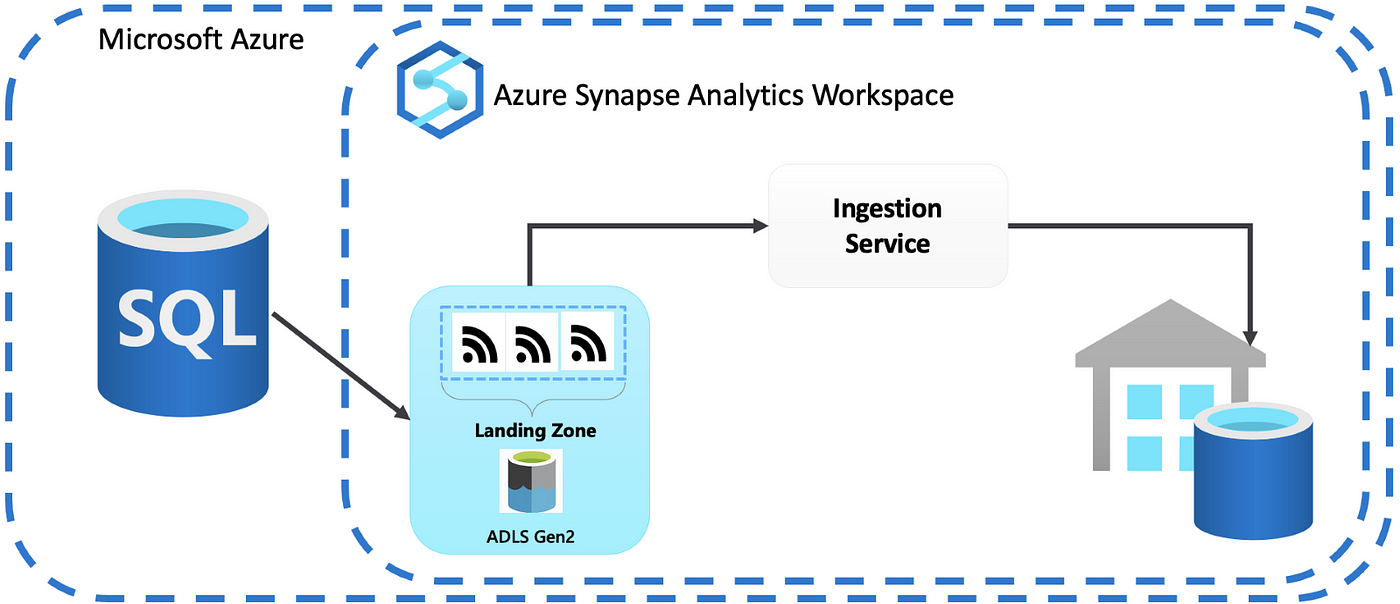

Data movement service is a function in Azure Synapse that allows you to copy data between different data stores and file systems. Data movement service allows you to transfer data between Azure Synapse (previously SQL Data Warehouse), Azure Blob Storage, Azure Data Lake Storage, and on-premises file systems.

Data movement service can be used to make one-time data transfers or to set up ongoing data replication. The data movement function can be used to transport data into Azure Synapse for analysis or out of Azure Synapse for archival or backup purposes.

Under the hood, the data movement service leverages Azure Data Factory to conduct data copies. In addition, the data movement service has a user interface in the Azure Synapse workspace that makes it simple to set up and monitor data transfer jobs.

7. Explain distribution in Synapse SQL?

A distribution is the basic unit of storage and parallel processing in a dedicated SQL pool. The rows of each table are spread across 60 distributions, and a query runs in parallel as a set of smaller queries, one per distribution, on the compute nodes.

When you build a table with Synapse SQL, you choose how its rows are assigned to distributions: hash, round-robin, or replicated. For a hash-distributed table you also specify a distribution column. The distribution column cannot be an identity or rowguid column and must be of a compatible data type. Its values determine which distribution each row is stored in.

Assume you have a huge table with many rows and wish to spread the rows out to increase query performance. You could build the table with a hash distribution on a column such as “region” or “customer id”, in which case rows with the same value always land in the same distribution. Alternatively, you could choose round-robin, which uses no distribution column and simply spreads rows evenly.

When constructing a table in Synapse SQL, it is critical to carefully evaluate the distribution type and, for hash distribution, the distribution column, as they can have a major impact on the performance of queries against the table.

8. Explain hash-distributed tables?

The rows of a hash-distributed table are spread among the distributions of a dedicated SQL pool based on the value of a hash key. The hash key is computed from the values in one or more table columns (the distribution columns). The same hash key always maps to the same distribution.

One of the fundamental advantages of hash-distributed tables is that the location of a row can be worked out from its key. Joins and aggregations on the distribution column can therefore run inside each distribution without moving data between nodes, which is why hash distribution is the usual choice for large fact tables.

Hash-distributed tables can also spread data uniformly, which helps balance the workload. This only holds when the distribution column has many distinct, evenly used values. A column with few values, or with one dominant value, concentrates rows in a few distributions and causes skew.

Note that hash distribution does not keep rows in any particular order, and neighbouring values are not stored together. It gives no special benefit to range queries or to sorting on the distribution column.
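The behaviour described above can be sketched in Python. This is a simulation under stated assumptions, not the service's actual algorithm: SHA-256 stands in for the internal hash function, and the 60 buckets correspond to the distributions of a dedicated SQL pool. The function names are hypothetical.

```python
import hashlib
from collections import Counter

DISTRIBUTIONS = 60  # a dedicated SQL pool spreads each table over 60 distributions


def distribution_for(key: str) -> int:
    """Map a distribution-column value to a distribution number (0-59).

    SHA-256 is only a stand-in for the service's internal hash function.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % DISTRIBUTIONS


def spread(keys):
    """Count how many rows land in each distribution."""
    return Counter(distribution_for(k) for k in keys)


if __name__ == "__main__":
    # Many distinct keys: rows end up in many distributions.
    many = spread(f"customer-{i}" for i in range(6000))
    print("distributions used (many distinct keys):", len(many))

    # Few distinct keys: at most three distributions receive any rows (skew).
    few = spread(["EU", "US", "APAC"] * 2000)
    print("distributions used (three distinct keys):", len(few))
```

The second case shows why a low-cardinality column such as a region code is usually a poor distribution column: most distributions stay empty while a few do all the work.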

9. Describe round-robin distributed tables?

Round-robin distributed tables are a dedicated SQL pool feature that spreads the rows of a table evenly across all distributions without looking at the values in the rows. This makes them simple to create and fast to load.

To create a round-robin distributed table, you use the DISTRIBUTION = ROUND_ROBIN option in the WITH clause of the CREATE TABLE statement. For example:

    -- PRIMARY KEY is left out: a dedicated SQL pool accepts it only as NONCLUSTERED ... NOT ENFORCED
    CREATE TABLE my_table (
        id INT NOT NULL,
        value INT
    )
    WITH (DISTRIBUTION = ROUND_ROBIN);

When you insert data into a round-robin distributed table, the rows are spread evenly across the distributions automatically. Queries against the table run in parallel across the distributions. Because rows with the same key are not stored together, joins on a round-robin table usually need extra data movement first.

Keep in mind that round-robin distributed tables are best suited to staging tables and to tables with no obvious join key, where fast loading is the primary concern. Round-robin distribution may not be the best option for a large table that is joined frequently. A hash-distributed table usually performs better in that case.
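As a rough illustration of round-robin placement, the following Python sketch hands each row to the next distribution in turn. It is a simplification: the strict rotation and the function name are assumptions for this example, and the service only promises an even spread, not this exact order.

```python
from collections import Counter
from itertools import cycle

DISTRIBUTIONS = 60  # distributions in a dedicated SQL pool


def round_robin(rows):
    """Pair each row with the next distribution in turn, ignoring row contents."""
    targets = cycle(range(DISTRIBUTIONS))
    return [(next(targets), row) for row in rows]


if __name__ == "__main__":
    placed = round_robin([{"id": i, "value": i % 3} for i in range(120)])
    per_distribution = Counter(dist for dist, _ in placed)
    print("rows per distribution:", set(per_distribution.values()))

    # Rows sharing the same "value" are scattered, so a join on "value"
    # would first have to move rows between distributions.
    homes = {dist for dist, row in placed if row["value"] == 0}
    print("distributions holding value = 0:", len(homes))
```

The even row counts explain the fast, skew-free loading, while the scattered keys explain why joins on such a table tend to need data movement.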

10. Explain replicated tables?

A replicated table in a dedicated SQL pool keeps a full copy of the table on every compute node. Because each node already holds all of the rows, joins against a replicated table need no data movement, which is why replicated tables are frequently employed to improve query performance. When data in the table is inserted, changed, or removed, the copies on the compute nodes are rebuilt so that every node stays in sync. Replicated tables work best for small dimension tables (Microsoft’s guidance is roughly 2 GB compressed or less). The extra storage and the cost of rebuilding the copies make them a poor fit for large or frequently modified tables.

11. How to create a serverless SQL pool in Azure Synapse Analytics?

Every Azure Synapse Analytics workspace includes a serverless SQL pool named “Built-in”, so you do not create the pool separately. You get it by creating a workspace. Follow these steps:

Access the Azure portal.

In the top-left corner, click the “Create a resource” button.

Enter “Azure Synapse Analytics” in the “Search the Marketplace” area and press Enter.

Select “Azure Synapse Analytics”.

Select the “Create” option.

Choose a subscription, a resource group, a workspace name, and a region for your Azure Synapse Analytics workspace.

Select or create the Azure Data Lake Storage Gen2 account and file system that the workspace will use as its default storage.

To review your choices, click the “Review + create” option.

If everything appears to be in order, click the “Create” button to start the deployment.

The workspace may take a few minutes to deploy. When it is complete, the built-in serverless SQL pool is ready, and you can connect to it and begin running queries.

12. What are the benefits of a serverless SQL pool?

A serverless SQL pool is a query service that lets you run T-SQL queries over data in storage without provisioning any servers. The following are some advantages of using a serverless SQL pool:

Low cost: Because you pay only for the data processed by the queries that you run, a serverless SQL pool can be less expensive than a provisioned SQL server that is billed while idle.

Scalability: A serverless SQL pool scales up or down automatically depending on the workload, so you do not pay for capacity you are not using.

Simplified management: With a serverless SQL pool, you don’t have to manage the underlying infrastructure. This reduces the time and effort required to keep the service running and up to date.

High availability: Serverless SQL pools are built to be highly available and can fail over automatically in the case of a failure.

Compatibility: Because serverless SQL pools support a large part of the T-SQL query language, much of your existing SQL query code and tooling carries over with little change.
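The low-cost point can be made concrete with a small estimate. The Python sketch below assumes the billing model as I understand it from Microsoft's cost management documentation: charges are based on the data processed, rounded up to the next MB, with a 10 MB minimum per query. The price per TB is a parameter you must supply yourself, and the function names are hypothetical. Check the current pricing page before relying on any figure.

```python
import math

MB = 1024 * 1024
TB = 1024 ** 4
MIN_BILLED_MB = 10  # assumed per-query minimum; verify against current documentation


def billed_bytes(processed_bytes: int) -> int:
    """Round a query's processed data up to whole MB, with a 10 MB floor."""
    whole_mb = max(MIN_BILLED_MB, math.ceil(processed_bytes / MB))
    return whole_mb * MB


def estimate_cost(processed_bytes_per_query, price_per_tb: float) -> float:
    """Estimate the charge for a batch of queries at a caller-supplied price."""
    total = sum(billed_bytes(b) for b in processed_bytes_per_query)
    return total / TB * price_per_tb


if __name__ == "__main__":
    # Three queries: a tiny lookup, a 250 MB scan, and a 2 TB scan.
    workload = [4_000, 250 * MB, 2 * TB]
    price = 5.0  # hypothetical price per TB, in your billing currency
    print(f"estimated charge: {estimate_cost(workload, price):.4f}")
```

Under this model, many tiny queries each pay the minimum, so reading less data per query (for example by selecting fewer columns from PARQUET files) is the main lever for reducing cost.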

13. How to start using a serverless SQL pool?

You can begin using the serverless SQL pool as soon as your Azure Synapse workspace exists, by following these steps:

Open Synapse Studio from your workspace in the Azure portal.

In the “Develop” hub, create a new SQL script.

In the “Connect to” list, select “Built-in”, which is the serverless SQL pool.

Write a T-SQL query, for example one that reads files from your storage account with OPENROWSET, and click “Run”.

You can also connect from tools such as SQL Server Management Studio (SSMS) or Azure Data Studio. Use the workspace’s serverless SQL endpoint as the server name, which has the form <workspace-name>-ondemand.sql.azuresynapse.net.

It is important to note that there is nothing to size, pause, or resume. The serverless SQL pool scales up and down automatically depending on the workload, and you are charged only for the data that your queries process. This can help you save money, especially if you have intermittent or irregular workloads.

14. Explain the use of client tools in Azure SQL Database serverless?

Azure SQL Database serverless is a low-cost, high-availability compute tier that scales compute resources automatically based on workload demand. Client tools are available for connecting to, managing, and querying Azure SQL Database serverless instances.

Client tools that are commonly used with Azure SQL Database serverless include:

SQL Server Management Studio (SSMS): This is a popular graphical interface for administering and querying serverless Azure SQL Database instances.

Azure Data Studio: A cross-platform database administration tool for connecting to and querying Azure SQL Database serverless instances.

sqlcmd: A command-line programme for executing T-SQL commands against Azure SQL Database serverless instances.

Azure CLI: This is a command-line interface for managing Azure resources, such as Azure SQL Database serverless instances.

Azure portal: A web-based management interface for Azure resources, including Azure SQL Database serverless instances.

In addition to these tools, you can connect to and interact with Azure SQL Database serverless instances using a variety of programming languages and frameworks. For example, with the right database drivers and libraries, you can connect to and query Azure SQL Database serverless from languages such as Python, Java, and C#.

15. Describe T-SQL support?

Serverless compute in Azure SQL Database is a compute tier that provides on-demand, automatically scaled compute based on your workload requirements. It automatically pauses when not in use and resumes when required. It is intended to provide a low-cost database service that automatically scales compute resources in response to workload needs.

In terms of T-SQL support, Azure SQL Database serverless provides the same syntax and functionality as the other Azure SQL Database service tiers. This includes, among other things, support for stored procedures, triggers, functions, views, and transactions. T-SQL allows you to query, insert, update, and delete data in your Azure SQL Database serverless instance, as well as create and alter database objects including tables, indexes, and views.

Because Azure SQL Database Serverless is a fully-managed service, you don’t have to bother about managing or maintaining the underlying infrastructure. Simply focus on building and running your T-SQL queries and stored procedures, and Azure SQL Database Serverless will scale and maintain the database infrastructure for you.

16. What security measures are taken to protect a serverless SQL pool in Azure Synapse Analytics?

To protect a serverless SQL pool, several security measures are available in Azure Synapse Analytics:

Network isolation: Access to a serverless SQL pool can be restricted at the network level with workspace firewall rules, and the workspace can be placed in a managed virtual network so that traffic stays on a private network.

Data encryption at rest: Data stored in a serverless SQL pool is encrypted at rest using Azure Storage Service Encryption.

Encryption in transit: Data transported to and from a serverless SQL pool is encrypted in transit using Transport Layer Security (TLS).

Authentication: Access to a serverless SQL pool is regulated using Azure Active Directory (AAD) authentication. You can also utilise Azure Private Link to connect to the pool safely over a private network connection.

Authorization: Using Azure RBAC and column-level security, you may restrict access to tables, views, and stored procedures within a serverless SQL pool.

Auditing: Azure Monitor can be used to track and audit access to a serverless SQL pool.

Threat protection: Azure Synapse Analytics detects and helps prevent potential risks to a serverless SQL pool by utilising Azure Advanced Threat Protection.

It is also critical to adhere to recommended practices for safeguarding your serverless SQL pool, such as using strong passwords, enabling multi-factor authentication, and patching and updating your Azure resources on a regular basis.

17. How does Azure SQL serverless support T-SQL?

Azure SQL serverless is a compute tier for Azure SQL Database that scales compute automatically based on workload demand and bills for the compute actually used, per second. You can run Transact-SQL (T-SQL) queries and stored procedures in a fully managed database with Azure SQL serverless without having to provision or manage any infrastructure.

T-SQL language features supported by Azure SQL serverless include:

- Data definition language (DDL) statements: CREATE, ALTER, DROP, and TRUNCATE

- Data manipulation language (DML) statements: SELECT, INSERT, UPDATE, DELETE, and MERGE

- Data control language (DCL) statements: GRANT, REVOKE, and DENY

- Transaction control statements: BEGIN TRANSACTION, COMMIT, and ROLLBACK

- Dynamic management views and functions

- Stored procedures, triggers, and functions

- Cursor and error handling

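Azure SQL serverless is meant to be compatible with the majority of T-SQL applications, so you can use it to run existing T-SQL queries and stored procedures without changing your code. When utilising Azure SQL serverless, there are a few constraints to be aware of:

The database is bound by the resource limits of the vCore range you configure and by the database engine’s capacity limits.

For example, a standard (non-wide) table can have at most 1,024 columns.

Limits such as the maximum size of a stored procedure or the number of tables in a query are set by the engine and can change between releases, so check the current limits documentation before relying on a specific number.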

18. Is it possible to access local storage in a serverless SQL pool?

No. A serverless SQL pool in Azure Synapse runs queries on data stored in Azure Storage, Azure Data Lake Storage, and Azure Cosmos DB. It has no access to the local storage of the system on which it runs, and it cannot read files from your own machine. To query such files, upload them to Azure Storage first.

If you want to access local storage from a serverless function running in Azure, you could consider using Azure Functions. Azure Functions allows you to run custom logic in the cloud in a serverless environment, and you can specify that the function has access to local storage by setting the WEBSITE_LOCAL_CACHE_OPTION app setting to Always. However, be aware that the local cache is not persisted across instances of the function, so you should only use it for storing temporary data that can be regenerated if necessary.

19. What are the extensions in a serverless SQL pool?

A serverless SQL pool is an Azure Synapse Analytics feature that enables you to run Transact-SQL queries on files in Azure Storage in a serverless environment. To make querying files practical, it extends standard T-SQL in a few places:

OPENROWSET: extended so that it can read files in Azure Storage directly, including multiple files or folders selected with wildcards.

File formats: PARQUET, Delta Lake, and CSV files can be queried in place.

Column selection and schema inference: you can read a chosen subset of columns, and the service can work out column names and types for you.

filename and filepath functions: return the name or path of the file that each row came from.

Complex types: nested or repeated data structures can be read and unpacked.

The questions that follow cover each of these extensions in turn.

20. Explain the OPENROWSET function?

The OPENROWSET function reads data from an external source and returns it as a rowset that you can use in the FROM clause like a table. In a serverless SQL pool, OPENROWSET(BULK ...) reads files such as CSV, PARQUET, and Delta Lake directly from Azure Storage. In SQL Server, the function can also read other sources through OLE DB providers, including Excel files.

Here’s an example of how the OPENROWSET function could be used in SQL Server to import data from an Excel file into a table. This provider-based form is not available in a serverless SQL pool:

    SELECT *
    INTO dbo.ImportedData
    FROM OPENROWSET('Microsoft.ACE.OLEDB.12.0',
        'Excel 12.0;Database=C:\Data\MyExcelFile.xlsx;',
        'SELECT * FROM [Sheet1$]');

This example creates a table called ImportedData in the dbo schema and imports all of the data from the first sheet of the Excel file MyExcelFile.xlsx into it.

In a serverless SQL pool, the BULK form of OPENROWSET plays the same role for files in Azure Storage: you give it the file URL and a FORMAT, and then query the result with ordinary T-SQL. It is a flexible way to explore and transform data without loading it first.

21. How to query multiple files or folders in a serverless SQL pool?

In a serverless SQL pool, you can read several files or folders in one query by using OPENROWSET in the FROM clause of your SELECT statement, either once per file or with a wildcard in the path.

An example of accessing data from two CSV files saved in an Azure Storage account is shown below. Each OPENROWSET call needs its own alias:

    SELECT *
    FROM OPENROWSET(
        BULK 'https://mystorageaccount.blob.core.windows.net/mycontainer/data1.csv',
        FORMAT = 'CSV',
        PARSER_VERSION = '2.0'
    ) AS T1
    UNION ALL
    SELECT *
    FROM OPENROWSET(
        BULK 'https://mystorageaccount.blob.core.windows.net/mycontainer/data2.csv',
        FORMAT = 'CSV',
        PARSER_VERSION = '2.0'
    ) AS T2;

A wildcard can also be used to read all matching files in a folder:

    SELECT *
    FROM OPENROWSET(
        BULK 'https://mystorageaccount.blob.core.windows.net/mycontainer/*.csv',
        FORMAT = 'CSV',
        PARSER_VERSION = '2.0'
    ) AS T;

It is important to note that you must have the proper permissions to access the storage account and the files, and that all files read by one OPENROWSET call should share the same structure.
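To see which files a wildcard path would pick up, the following Python sketch matches blob paths against a pattern one folder level at a time. It is an approximation for illustration only: it uses Python's fnmatch rules rather than the service's own matcher, the helper name is hypothetical, and the file names are made up.

```python
from fnmatch import fnmatchcase


def matches(path: str, pattern: str) -> bool:
    """Return True if path matches pattern, with * confined to one path segment."""
    path_parts = path.strip("/").split("/")
    pattern_parts = pattern.strip("/").split("/")
    if len(path_parts) != len(pattern_parts):
        return False  # a single * never crosses a folder boundary in this sketch
    return all(fnmatchcase(p, pat) for p, pat in zip(path_parts, pattern_parts))


if __name__ == "__main__":
    blobs = [
        "mycontainer/data1.csv",
        "mycontainer/data2.csv",
        "mycontainer/readme.txt",
        "mycontainer/archive/old.csv",
    ]
    selected = [b for b in blobs if matches(b, "mycontainer/*.csv")]
    print(selected)
```

Listing the files that a pattern selects before running the query is a cheap way to avoid reading, and paying for, files you did not intend to include.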

22. How to query the PARQUET file format in a serverless SQL pool?

You can take the following steps to query a PARQUET file saved in Azure Storage from a serverless SQL pool in Azure Synapse:

Create an external data source that points to your Azure Storage account and container. This can be accomplished with the T-SQL statement CREATE EXTERNAL DATA SOURCE.

Create an external file format that describes PARQUET files. This can be accomplished with the T-SQL statement CREATE EXTERNAL FILE FORMAT.

Create an external table that references the PARQUET file, the external data source, and the file format. This can be accomplished with the T-SQL statement CREATE EXTERNAL TABLE.

Query the external table in the same way you would a regular table.

Here is an example of the T-SQL statements that you could use:

    -- Replace <container_name> and <storage_account_name> with your own values.
    -- AzureStorageCredential is assumed to be an existing database scoped credential.
    CREATE EXTERNAL DATA SOURCE MyAzureStorage
    WITH (
        LOCATION = 'https://<storage_account_name>.blob.core.windows.net/<container_name>',
        CREDENTIAL = AzureStorageCredential
    );

    -- File format that the external table refers to
    CREATE EXTERNAL FILE FORMAT ParquetFileFormat
    WITH (FORMAT_TYPE = PARQUET);

    -- Replace <folder_name> and <file_name> with the location of your PARQUET file
    CREATE EXTERNAL TABLE dbo.MyParquetTable (
        <column_name_1> <data_type_1>,
        <column_name_2> <data_type_2>,
        …
    ) WITH (
        DATA_SOURCE = MyAzureStorage,
        LOCATION = '/<folder_name>/<file_name>.parquet',
        FILE_FORMAT = ParquetFileFormat
    );

    -- Now you can query the external table like a regular table
    SELECT * FROM dbo.MyParquetTable;

23. How to query Delta Lake files using a serverless SQL pool in Azure Synapse Analytics?

To query Delta Lake files in Azure Synapse Analytics using a serverless SQL pool, follow these steps:

In the Azure portal, navigate to the Azure Synapse Analytics workspace and open Synapse Studio.

In the “Develop” hub, create a new SQL script and make sure it is connected to the “Built-in” serverless SQL pool.

Write a SQL query in the editor to select data from the Delta Lake files. The query should use the OPENROWSET function with the location of the Delta Lake folder and the DELTA format. The path must point to the root folder of the Delta table, not to an individual PARQUET file inside it.

Here’s an example query that pulls data from a Delta Lake table stored in a container called “delta” in the storage account:

    SELECT *
    FROM OPENROWSET(
        BULK 'https://<storage-account-name>.blob.core.windows.net/delta/',
        FORMAT = 'DELTA'
    ) AS DeltaTable;

When you’re through writing the query, click the “Run” button to run it and see the results in the results pane.

24. How to query CSV files in a serverless SQL pool?

To query a CSV file in a serverless SQL pool, you can use the CREATE EXTERNAL TABLE command in Azure Synapse Analytics. The external data source and a delimited-text file format must already exist.

Here’s an example of how to accomplish it:

    -- <path_to_csv_file> is a placeholder for the file's path inside the data source
    CREATE EXTERNAL TABLE [dbo].[MyTable] (
        [Column1] VARCHAR(50),
        [Column2] INT,
        [Column3] DECIMAL(10,2)
    )
    WITH (
        LOCATION = '<path_to_csv_file>',
        DATA_SOURCE = [MyDataSource],
        FILE_FORMAT = [MyFileFormat],
        REJECT_TYPE = VALUE,
        REJECT_VALUE = 0
    );

This adds an external table MyTable to the dbo schema, with three columns of the data types VARCHAR, INT, and DECIMAL. The WITH clause provides the file location, the external data source, the file format, and the reject type and reject value, which control how many malformed rows are tolerated before the query fails.

The CSV file can then be queried using regular T-SQL commands. As an example:

    SELECT * FROM [dbo].[MyTable];

This will select all rows and columns from the CSV file.

25. How to query Azure Cosmos DB data with a serverless SQL pool in Azure Synapse Link?

To query Azure Cosmos DB data using a serverless SQL pool in Azure Synapse Link, follow these steps:

Make sure Azure Synapse Link is enabled on your Azure Cosmos DB account and that the container has its analytical store turned on.

Use Synapse Studio, SQL Server Management Studio (SSMS), or Azure Data Studio to connect to the serverless SQL pool in your Azure Synapse workspace.

If you don’t already have a database in the serverless SQL pool, create one.

Read the container with the OPENROWSET function. The serverless SQL pool does not use an external table for Azure Cosmos DB. Instead, you pass the “CosmosDB” provider name, a connection string, and the container name. You can accomplish this with the following T-SQL statement:

    -- Replace the <...> placeholders with your own account, database, key, and container
    SELECT TOP 10 *
    FROM OPENROWSET(
        'CosmosDB',
        'Account=<account_name>;Database=<database_name>;Key=<account_key>',
        <container_name>
    ) AS documents
    WHERE [column_name_1] = 'value';

The query reads the analytical store, so it does not consume the request units of your transactional workload. To reuse the query, you can wrap it in a view and then query the view using regular T-SQL commands.

26. How to read a chosen subset of columns?

In a serverless SQL pool, you can use the SELECT statement to define which columns to read from a table. As an example:

    SELECT column1, column3
    FROM mytable;

This will select only the column1 and column3 columns from the mytable table. When you read files with OPENROWSET, a WITH clause that lists only the columns you need has the same effect, and with columnar formats such as PARQUET it also reduces the amount of data read.

You can also use the * wildcard to select all columns, like this:

    SELECT *
    FROM mytable;

This will select all columns from the mytable table.

27. Explain schema inference?

Schema inference is a feature of the serverless SQL pool (formerly SQL on-demand) that lets you query files in Azure Blob Storage or Azure Data Lake Storage without declaring their columns first. If you call OPENROWSET without a WITH clause, the service works out the column names and data types for you.

When you use schema inference, the service analyses the source file and provides an automatically generated schema based on the data types and structure of the data. For PARQUET files the schema comes from the metadata stored in the file, and for CSV files it is derived from the data itself. This allows you to start querying quickly without having to manually construct the schema.

There are several advantages of employing schema inference:

It helps you save time and effort: You don’t have to spend time manually specifying your table’s schema.

It is adaptable: You don’t have to worry about supplying the correct data types or structure because the schema is produced automatically based on the data.

It’s simple to use: With a few mouse clicks or API calls, you may construct a table and load data into it.

Using schema inference has some restrictions that should be taken into account:

The schema is created based on the data in the source file, so it might not be accurate if the data is inaccurate or incomplete.

The schema is derived from the data examined at query time. If the data structure changes in later files, the inferred schema may no longer match what your queries expect.

When utilising schema inference, you cannot define specific data types or sizes. Only the types that are generated automatically from the data are used, and they are often wider than necessary, which can hurt performance. Use a WITH clause to state the types explicitly when this matters.

Overall, the ability to query data without having to explicitly create the schema is a beneficial feature provided by schema inference. To make sure the generated schema is accurate and appropriate for your needs, you should carefully evaluate it and be aware of the feature’s limits.
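The idea behind inferring a schema from file contents, and the sampling limitation noted above, can be sketched in Python. This is a toy model, not the service's algorithm: the three type names, the sampling rule, and the function names are all assumptions chosen for the illustration.

```python
import csv
import io


def infer_type(values) -> str:
    """Pick the narrowest of three type names that fits every sampled value."""
    def all_parse(cast) -> bool:
        try:
            for value in values:
                cast(value)
            return True
        except ValueError:
            return False

    if all_parse(int):
        return "BIGINT"
    if all_parse(float):
        return "FLOAT"
    return "VARCHAR"


def infer_schema(csv_text: str, sample_rows: int = 100) -> dict:
    """Infer {column name: type name} from the header and the first sample rows."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    sample = [row for _, row in zip(range(sample_rows), reader)]
    columns = list(zip(*sample))  # transpose rows into columns
    return {name: infer_type(col) for name, col in zip(header, columns)}


if __name__ == "__main__":
    data = "id,price,name\n1,2.5,apple\n2,3,pear\n"
    print(infer_schema(data))

    # Sampling only the first row misses the text value further down.
    print(infer_schema("code\n1\nabc\n", sample_rows=1))
```

The second call shows the risk described above: a schema inferred from incomplete data can be wrong for later rows, which is a good reason to state column types explicitly for production queries.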

28. Explain the filename function?

A serverless SQL pool in Azure Synapse Analytics reads data directly from files, is billed per query, and requires no infrastructure management. When one query reads many files, it is often useful to know which file each row came from.

The filename function returns, as a string, the name of the file that a row originates from. It is called on the alias of an OPENROWSET rowset and can be used in the SELECT list as well as in the WHERE, GROUP BY, and ORDER BY clauses.

The syntax for the filename function is as follows:

    <alias>.filename()

The function takes no parameters. The returned value includes the file extension but not the folder path.

Here is an illustration of how to utilize the filename function:

    SELECT r.filename() AS file_name, COUNT_BIG(*) AS row_count
    FROM OPENROWSET(BULK 'https://mystorageaccount.blob.core.windows.net/mycontainer/*.csv', FORMAT = 'CSV', PARSER_VERSION = '2.0') AS r
    WHERE r.filename() IN ('data1.csv', 'data2.csv')
    GROUP BY r.filename();

This example reads every CSV file in the container, keeps only the rows that came from data1.csv or data2.csv, and returns the number of rows in each of those files.

29.Explain Filepath function ?

The filepath function is the companion of filename in the serverless SQL pool. It is also called on an OPENROWSET alias and returns the path of the file that each row was read from.

Called without an argument, filepath returns the full path of the file. Called with a number, it returns the part of the path that matched the wildcard (*) at that position in the BULK path. This is helpful when the data is partitioned into folders, such as one folder per year and month, and you want to filter on or report the folder values.

The syntax for the filepath function is as follows:

<alias>.filepath()
<alias>.filepath(wildcard_position)

The wildcard_position parameter is the 1-based position of the wildcard whose matching text you want to get.

You can use the filepath function to read a single partition, for instance, if the files are stored in folders named like “year=2023” and “month=07”. The storage URL is a placeholder:

SELECT r.filepath(1) AS [year], r.filepath(2) AS [month], COUNT_BIG(*) AS row_count
FROM OPENROWSET(
    BULK 'https://<storage-account>.dfs.core.windows.net/<container>/sales/year=*/month=*/*.parquet',
    FORMAT = 'PARQUET'
) AS r
WHERE r.filepath(1) = '2023'
GROUP BY r.filepath(1), r.filepath(2);

The query returns the row count for each month of 2023.

Because the filter is applied to the path, files in the folders of other years are skipped rather than scanned. The serverless SQL pool bills for the amount of data processed, so filtering with filepath lowers the cost of the query as well as its run time.
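In the serverless SQL pool, filepath(n) on an OPENROWSET alias returns the text that the n-th wildcard of the BULK path matched, and filepath() without an argument returns the whole path. The Python sketch below only emulates that behaviour on plain strings to make the numbering concrete. It is an illustration, not the engine's implementation; the function name emulate_filepath is hypothetical, and it assumes a wildcard does not cross a "/" boundary:

```python
import re
from typing import Optional


def emulate_filepath(pattern: str, path: str, n: Optional[int] = None) -> Optional[str]:
    """Mimic filepath(): the full path when n is None, else the n-th wildcard match."""
    # Turn every '*' into a capture group and match all other text literally.
    regex = "([^/]*)".join(re.escape(part) for part in pattern.split("*"))
    match = re.fullmatch(regex, path)
    if match is None:
        return None  # the path is not covered by the pattern
    if n is None:
        return path
    return match.group(n)  # wildcard positions are 1-based


pattern = "sales/year=*/month=*/*.parquet"
path = "sales/year=2023/month=07/part-0001.parquet"

year = emulate_filepath(pattern, path, 1)
month = emulate_filepath(pattern, path, 2)
print(year, month)
```

Here the first wildcard yields the year folder value and the second the month folder value, which is why a filter on filepath(1) selects a whole year of files.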

30.How to Work with complex types and nested or repeated data structures ?

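In Azure Synapse (formerly SQL Data Warehouse), nested or repeated data structures such as arrays, maps, and structs usually arrive in Parquet or JSON files. Synapse SQL tables have no ARRAY, MAP, or STRUCT column types. Instead, the serverless SQL pool returns each nested value as JSON text, which you process with the JSON functions.

To expose a file with a complex column, query it with OPENROWSET and declare the complex column as a character type in the WITH clause. Wrapping the query in a view gives it a name. The storage URL is a placeholder:

CREATE VIEW mytable AS
SELECT *
FROM OPENROWSET(
    BULK 'https://<storage-account>.dfs.core.windows.net/<container>/nested/*.parquet',
    FORMAT = 'PARQUET'
) WITH (id INT, data VARCHAR(8000)) AS r

To read individual fields from the complex column, use JSON_VALUE with a JSON path. The following returns the fields of the first element of the array:

SELECT id,
    JSON_VALUE(data, '$[0].field1') AS field1,
    JSON_VALUE(data, '$[0].field2') AS field2
FROM mytable

To return one row per array element, flatten the column with CROSS APPLY and OPENJSON, as seen below:

SELECT t.id, item.field1, item.field2
FROM mytable AS t
CROSS APPLY OPENJSON(t.data) WITH (field1 INT, field2 VARCHAR(100)) AS item

You can also use JSON_QUERY to return a nested object or array as JSON text, and OPENJSON without a WITH clause to list the key, value, and type of every element.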
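The serverless SQL pool hands nested Parquet columns to the query as JSON text, and CROSS APPLY OPENJSON turns each array element into its own row. The Python sketch below mirrors that flattening step on in-memory data so the row multiplication is easy to see. The sample rows and the names field1 and field2 are illustrative assumptions:

```python
import json

# Each row carries its nested column as JSON text, the way the pool returns it.
rows = [
    {"id": 1, "data": '[{"field1": 1, "field2": "abc"}, {"field1": 2, "field2": "def"}]'},
    {"id": 2, "data": "[]"},
]


def flatten(source_rows):
    """Yield one (id, field1, field2) tuple per array element."""
    for row in source_rows:
        for item in json.loads(row["data"]):
            # A missing key becomes None, much like a NULL column in SQL.
            yield (row["id"], item.get("field1"), item.get("field2"))


flat = list(flatten(rows))
print(flat)
```

The row with an empty array disappears from the result, which matches CROSS APPLY. To keep such rows with NULL fields in T-SQL, OUTER APPLY would be used instead.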

31.How to Azure Active Directory integration and multi-factor authentication ?

Microsoft Azure Active Directory (AD) is a cloud-based identity and access management solution. It allows you to centrally manage access to programmes and resources.

One way to improve security with Azure AD is multi-factor authentication (MFA). MFA requires users to provide an additional proof of identity beyond their username and password, which makes a stolen password far less useful to an attacker. MFA methods supported by Azure AD include phone calls, text messages, and mobile app notifications.

To enable Azure AD integration and MFA, create an Azure account and follow the Azure portal’s Azure AD setup instructions. After you’ve configured Azure AD, you can enable MFA for your users and define which MFA methods they can use.

32.How to authenticate Serverless SQL pool ?

In Azure, there are numerous methods for authenticating a serverless SQL pool. Here are three common approaches:

Authentication using Azure Active Directory (AAD): You can use Azure Active Directory to authenticate users and group memberships for a serverless SQL pool. This is a safe and scalable method of controlling database access.

SQL Authentication: SQL authentication can also be used to connect to a serverless SQL pool. When connecting to the database using this method, you must give a username and password.

Integrated Authentication: This is a mode of Azure Active Directory authentication rather than a separate mechanism. On a domain-joined Windows machine whose domain is federated or synchronized with Azure AD, you can connect to a serverless SQL pool using the credentials of the user who is already logged in, without typing them again.

You must set up the proper connection settings in your client application or tool to use any of these authentication methods.
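The connection settings for the three methods differ mainly in the Authentication keyword of the connection string. The following is a minimal Python sketch that builds ODBC connection strings for each method. It assumes the Microsoft ODBC Driver 18 for SQL Server and the usual serverless endpoint naming (workspace name followed by "-ondemand.sql.azuresynapse.net"); the function name and the workspace, database, and user values are hypothetical:

```python
def build_connection_string(workspace: str, database: str, auth: str,
                            user: str = "", password: str = "") -> str:
    """Return an ODBC connection string for a serverless SQL pool endpoint."""
    server = f"{workspace}-ondemand.sql.azuresynapse.net"
    base = (
        "Driver={ODBC Driver 18 for SQL Server};"
        f"Server=tcp:{server},1433;Database={database};Encrypt=yes;"
    )
    if auth == "sql":
        # SQL authentication: user name and password of a SQL login.
        return base + f"Uid={user};Pwd={password};"
    if auth == "aad-interactive":
        # Azure AD with an interactive prompt, which can include MFA.
        return base + f"Uid={user};Authentication=ActiveDirectoryInteractive;"
    if auth == "aad-integrated":
        # Azure AD using the credentials of the signed-in Windows user.
        return base + "Authentication=ActiveDirectoryIntegrated;"
    raise ValueError(f"unsupported auth mode: {auth}")


conn_str = build_connection_string("myworkspace", "mydb", "aad-integrated")
print(conn_str)
```

The resulting string can be passed to an ODBC client such as pyodbc. Values that contain semicolons or braces need additional quoting, which this sketch leaves out, and real passwords should come from a secret store rather than source code.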

33.How to access storage account in Serverless SQL pool ?

A serverless SQL pool reads the files in a storage account directly, through the OPENROWSET function or through external tables. No synchronization service is involved. What you need to arrange is permission to read the storage. Here’s how you can do it:

Choose how the pool authorizes against the storage account. The options are the caller’s own Azure AD identity, the managed identity of the Synapse workspace, or a shared access signature (SAS) token. SQL logins cannot pass their own identity to storage, so they need a managed identity or SAS credential.

Grant access on the storage side. For Azure AD users and for the managed identity, assign a role such as “Storage Blob Data Reader” on the storage account or container. For SAS, generate a token with read and list permissions.

In a user database of the serverless SQL pool, create a database scoped credential that holds the managed identity or SAS token. This step is not needed when Azure AD users access storage with their own identity.

Create an external data source that points to the container and, if you created one, references the credential.

Query the files with OPENROWSET, giving the path relative to the external data source, or define external tables on top of them.

After this setup, every query reads the current content of the storage account, so there is nothing to keep in sync. The same files remain accessible via the Azure Storage API or through tools such as Azure Storage Explorer.

34.How to access Azure cosmos DB in serverless SQL pool ?

To connect to Azure Cosmos DB from a serverless SQL pool, you use Azure Synapse Link. Synapse Link maintains a column-oriented copy of a container, called the analytical store, and the serverless SQL pool queries that copy with OPENROWSET, so analytical queries do not compete with the transactional workload. The steps are as follows:

Navigate to your Azure Cosmos DB account in the Azure portal and enable Azure Synapse Link for the account.

Enable the analytical store on each container that you want to query.

Note the account name, the database name, and a read-only account key from the “Keys” page of the Cosmos DB account.

In Synapse Studio, open a new SQL script and connect it to the built-in serverless SQL pool.

Enter a SELECT statement that reads the container through OPENROWSET, using 'CosmosDB' as the provider, a connection string that contains the account, database, and key, and the name of the container.

Optionally, wrap the statement in a view so that other users and tools can query the container by name.

After that, you may use ordinary SQL statements to query the data in your Cosmos DB container via the pool.

35.What is dedicated SQL pool (formerly SQL DW) in Azure Synapse Analytics?

Dedicated SQL pool (formerly known as SQL DW) is the enterprise data warehousing feature of Azure Synapse Analytics. It stores data in relational tables, in a columnar format by default, and queries it with T-SQL. Through the use of a massively parallel processing architecture, it can run complex queries on enormous datasets quickly. PolyBase and the COPY statement let you load or query data that sits in Azure Blob Storage or Azure Data Lake Storage. You can also connect a dedicated SQL pool to Azure Machine Learning to build machine learning models on the data in your warehouse.

36.How to create a workspace for a dedicated SQL pool ?

To create a workspace in Azure Synapse Analytics (previously SQL DW) for a dedicated SQL pool, follow these steps:

Sign in to the Azure portal using your Azure account.

In the top left corner of the portal, click the “Create a resource” button.

Enter “Azure Synapse Analytics (formerly SQL DW)” in the search box and select the result to launch the “Create Azure Synapse Analytics (formerly SQL DW)” blade.

Provide the necessary information to create your workspace on the blade. This includes the following:

Subscription: Choose the Azure subscription in which you want to create the workspace.

Resource group: Create a new resource group or use one that already exists.

Workspace name: Give your workspace a distinctive name.

Location: Choose the region in which you wish to locate your workspace.

Set up a new SQL pool: To build a new dedicated SQL pool, click the “Yes” button.

SQL pool name: Give your SQL pool a unique name.

Performance level: Select the performance level for your SQL pool.

To review your choices, click the “Review + create” option.

If everything is in order, click the “Create” button to start creating the workspace and the dedicated SQL pool.

It may take a few minutes to build the workspace and SQL pool. You can track the deployment’s progress in the portal’s notifications section.

You can access the workspace and SQL pool via the Azure portal once the deployment is complete. SQL Server Management Studio or any other SQL client tool can also be used to connect to the SQL pool.

37.What is the difference between Azure synapse dedicated SQL pools and dedicated SQL pool in Azure Synapse analytics workspace ?

A dedicated SQL pool (formerly SQL DW) is the standalone data warehouse that was originally released as Azure SQL Data Warehouse. It is hosted on a logical SQL server and delivers a SQL-based, fully managed, petabyte-scale data warehouse. You can pause and resume its compute while the data stays in storage, and scaling it up or down typically takes minutes.

A dedicated SQL pool in an Azure Synapse Analytics workspace, on the other hand, uses the same engine but is created inside a Synapse workspace. There it sits next to the other workspace features, such as the serverless SQL pool, Apache Spark pools, pipelines, and Synapse Studio, and it is managed with the workspace’s network and security settings. This allows you to combine the warehouse with the rest of your analytics in one place.

In conclusion, both are the same fully managed data warehouse engine. The difference is where the pool lives: on a standalone logical SQL server, or inside a Synapse workspace with its integrated tooling.

38.Explore the Azure synapse analytics documentation ?

Azure Synapse Analytics (previously SQL Data Warehouse) is a data integration, analytics, and visualization platform hosted in the cloud. It can be used to mix structured and unstructured data from various sources, such as data lakes, data warehouses, and streaming data sources.

The following resources will assist you in getting started with Azure Synapse Analytics:

Azure Synapse Analytics documentation: This is the official Azure Synapse Analytics documentation, and it provides a thorough overview of the platform, including features, pricing, and technical details.

Quickstart for Azure Synapse Analytics: This guide demonstrates how to set up Azure Synapse Analytics and launch your first project.

Tutorial for Azure Synapse Analytics: This tutorial gives you a practical introduction to Azure Synapse Analytics. You’ll learn how to set up a workspace, import data, and carry out fundamental data manipulation operations.

Samples repository: Data ingestion, data transformation, and data visualization are just a few of the activities that Azure Synapse Analytics can be used for. The samples repository offers code and scripts that show how to do these tasks.

39.What are the Key components of a big data solution in a dedicated SQL pool ?

The components of a big data solution in a dedicated SQL pool often include:

Data sources: The sources of the data that will be ingested and stored in the big data solution. These can include log files, social media feeds, sensors, and other structured and unstructured data sources.

Data ingestion: The process of importing data from data sources into the big data solution. Tools like Azure Data Factory, Apache Flume, and Apache Sqoop can be used for this.

Data storage: A distributed file system, such as HDFS (Hadoop Distributed File System) or Azure Data Lake, is used to store the data that has been ingested from the data sources.

Data processing: The process of transforming ingested data into a format that can be analysed and queried. This can be accomplished with tools such as Apache Spark or Azure Databricks.

Data analysis and querying: Once the data has been processed, it can be examined and queried using tools such as Apache Hive, Apache Impala, or Azure Synapse Analytics (formerly SQL Data Warehouse).

Visualization: The findings of the data analysis can be visualized using tools such as Tableau, Power BI, or Apache Zeppelin.

Data governance: It is critical to have a strategy for managing and administering the data in the big data solution to assure its quality, security, and regulatory compliance.

40.Explore Azure Synapse architecture ?

Azure Synapse (previously SQL Data Warehouse) is a data integration and analytics platform in the cloud that combines data integration, data warehousing, and big data analytics into a single service. It enables you to acquire insights from your data by using a number of data sources, including structured and unstructured data, and the power of Azure Machine Learning.

Azure Synapse’s architecture is made up of three major components:

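Compute nodes: These are the servers that run the SQL engine and execute queries in parallel. A control node receives each query, optimizes it, and hands the work out to the compute nodes. You can adjust the number of compute nodes depending on the demands of your workload.

Storage: Azure Synapse keeps table data in Azure Storage, split into 60 distributions, and stores it in a columnar format by default. Because storage is separate from compute, you can scale or pause compute without moving the data, and the columnar format makes queries over large tables efficient.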

Integration runtime: This is the component that enables Azure Synapse to connect to multiple data sources and perform data integration operations. The integration runtime can be configured to run on-premises or in the cloud.

In addition to these fundamental components, Azure Synapse features a range of tools and services for data integration, data visualization, and machine learning. These include Azure Data Factory, Azure Databricks, and Azure Machine Learning.

41.Describe Transact-SQL features supported in Azure Synapse SQL ?

Azure Synapse SQL (formerly SQL Data Warehouse) is a fully managed cloud data warehouse that works with Azure Machine Learning, Azure Data Factory, and Power BI. Its principal query language is a variation of Transact-SQL (T-SQL), a computer language used for managing and manipulating data in Microsoft SQL Server.

Some of the T-SQL functionalities provided by Azure Synapse SQL are as follows:

Statements such as SELECT, INSERT, UPDATE, DELETE, and MERGE are used to query and alter data in a database table.

Stored procedures: Stored procedures are named sets of SQL statements that can be executed multiple times. They can take input parameters and return several sets of results. Unlike in SQL Server, stored procedures in Synapse SQL are not pre-compiled.

Functions: While functions and stored procedures are similar, functions only return a single value or a table. Dedicated SQL pool supports scalar functions, which return a single value, and inline table-valued functions, which return a table.

Triggers: Triggers are special stored procedures that run automatically in reaction to specific events, such as adding or changing a row in a table. Synapse SQL does not support triggers, so this logic has to live in stored procedures or in the loading pipeline.

Cursors: Cursors let you conduct operations on each row of a result set while you iterate over the results one at a time. Synapse SQL does not support cursors; the usual replacement is a WHILE loop over a numbered set of rows.

Transactions: A group of SQL statements can be executed as a single unit of work using transactions. The transaction is rolled back entirely if even one of the statements fails. Dedicated SQL pool supports transactions on table data.

CTEs: CTEs are temporary result sets that can be utilized within a SELECT, INSERT, UPDATE, DELETE, or CREATE VIEW statement. They are handy for splitting down large requests into smaller, more manageable chunks.

Window functions: Window functions run calculations across a group of rows and produce a single result for each row. They are often used for ranking, aggregation, and running totals.

PIVOT and UNPIVOT: PIVOT and UNPIVOT allow you to rotate and transpose rows into columns and vice versa. They are useful for reporting and data analysis.

JSON functions: You may use JSON functions to parse and query JSON data stored in Azure Synapse SQL.

Dynamic SQL: With dynamic SQL, you can build and execute T-SQL statements on the fly. It’s useful for creating dynamic and adaptable queries.

42.What are the Database objects in Synapse SQL ?

There are numerous types of database objects available in Azure Synapse Analytics (previously SQL Data Warehouse) for storing and managing data:

Tables: In Synapse SQL, tables are the basic unit of data storage. Tables can store structured data in the form of rows and columns and can be queried using SQL.

Views: Views are virtual tables defined by a SELECT statement. Views can be used to simplify complex queries or to present data to users in a particular way.

Stored Procedures: Stored procedures are named sets of SQL statements that can be executed with a single call. Stored procedures can be used to encapsulate complex logic and to reduce the amount of SQL transmitted to the server.

Functions: Functions are reusable pieces of code that take parameters and return a value. In Synapse SQL, there are two sorts of functions: scalar functions, which return a single value, and inline table-valued functions, which return a set of rows.

Indexes: Indexes are used to increase query performance by giving a faster way to look up data in a table. Indexes on one or more columns in a table can be created to speed up queries that filter or sort data on those columns.

Triggers: Triggers are specialised stored procedures that are executed automatically in response to specific events, such as the insertion, deletion, or update of a row in a table. They are listed here for completeness only: Synapse SQL does not support triggers, so data integrity checks and follow-up processing belong in stored procedures or in the loading pipeline.

43.What are the Query languages used in Synapse SQL ?

You can use Transact-SQL (T-SQL) as the query language in Synapse SQL. T-SQL is Microsoft’s proprietary extension of standard SQL and is used to manage and work with relational databases. It is the language of Microsoft SQL Server and of Azure Synapse Analytics (formerly SQL Data Warehouse).

T-SQL is a strong and adaptable language that enables you to carry out a variety of activities, including:

Creating and modifying database objects such as tables, views, and stored procedures

Querying and modifying data

Controlling access to the database and its objects

Handling transactions and errors

There are many different kinds of statements you can run using T-SQL, including:

SELECT queries retrieve data from one or more tables.

INSERT, UPDATE, and DELETE statements change table data.

CREATE and DROP statements add or remove database objects.

TRUNCATE TABLE removes all rows from a table.

ALTER TABLE changes a table’s structure, and CREATE INDEX adds an index to it.

Synapse SQL comes in two forms, dedicated SQL pool and serverless SQL pool (formerly SQL on-demand), and both use T-SQL. Related technologies complement it rather than replace it: PolyBase reads external data from within T-SQL, and Azure Stream Analytics has its own SQL-like language for streaming data.

44.How to use built in security features in Synapse SQL pool ?

Azure Synapse SQL pools (formerly known as SQL Data Warehouse) are a cloud-based data warehousing service that offers SQL-based big data analytics and integration. The dedicated SQL pool stores data in a relational warehouse, while the serverless SQL pool queries files in the data lake.

You can take the following actions to use the built-in security features in Synapse SQL pools:

Restrict network access: Set up server-level firewall rules that only permit the IP addresses of your clients to connect to the server. Combined with authentication through SQL logins or Azure Active Directory, this shields your data from unauthorized access.

Use secure connections: Transport Layer Security (TLS), the successor of Secure Sockets Layer (SSL), encrypts communications between your client and the server. Your data will be transmitted securely and you will be protected against man-in-the-middle attacks as a result.

Use row-level security: By using row-level security, you may limit which users have access to which table rows. Fine-grained access controls and the protection of sensitive data can both be accomplished with this.

Use Transparent Data Encryption (TDE): TDE is a method for encrypting data that is kept in a Synapse SQL pool. This will aid in preventing unauthorised access to the data, even if an attacker manages to access the underlying storage.

Use Azure Private Link: You can use Azure Private Link to safely access your Synapse SQL pool over a private network connection. This can help defend against network-based attacks and keeps your traffic off the public internet.

45.What are the various tools used to connect Synapse SQL to query data ?

There are several tools available for connecting to and querying data in Synapse SQL:

SQL Server Management Studio (SSMS): This is a free Microsoft programme that you can use to connect to and query Synapse SQL. It is a robust application that gives a graphical interface for dealing with your data and also supports a wide range of functions such as code completion and formatting.

Visual Studio: If you are a developer, you may use Visual Studio to connect to and query Synapse SQL. Visual Studio is a robust integrated development environment (IDE) that contains a range of tools and features for working with data, including support for connecting to and querying Synapse SQL.

Azure Data Studio is a free, cross-platform application for connecting to and querying Synapse SQL. It is a lightweight program designed for data work, featuring code completion and formatting support, as well as a range of other features.

SQL command line tools: SQL command line tools like sqlcmd and bcp can be used to connect to Synapse SQL. sqlcmd runs SQL statements and scripts from the command line, and bcp bulk-copies data in and out, which is useful if you prefer to work this way or need to automate processes.

Programming languages and libraries: If you are a developer, you can connect to and query Synapse SQL using programming languages and platforms such as Python, Java, or .NET. You can connect to Synapse SQL and run SQL commands and queries from your code using a variety of libraries and drivers.

46.Explain various storage data in Synapse SQL ?

Azure Synapse SQL (formerly SQL Data Warehouse) is a cloud-based data warehouse solution that includes data warehouse, big data integration, and real-time analytics capabilities. Data analysis with Azure Machine Learning, Azure Stream Analytics, and Azure Databricks is possible, as is interaction with Azure Monitor, Azure Security Center, and Azure Active Directory.

Data in Synapse SQL can be saved in a variety of ways:

Rowstore: Rowstore is a classic, tabular data storage format that stores data in rows. It is designed for rapid inserts, updates, and deletes and is well-suited for transactional workloads.

Columnstore: Columnstore is a columnar data storage type that stores data in columns rather than rows. It is designed for quick querying and data warehousing tasks, and it can give significant performance benefits over rowstore for queries that scan vast volumes of data.

External tables: External tables allow you to access data saved in external data sources, such as Azure Blob Storage or Azure Data Lake Storage, as if it were a standard table in Synapse SQL. This can be handy for querying enormous datasets that are too large to load into Synapse SQL.

PolyBase: PolyBase is a Synapse SQL feature that allows you to use T-SQL queries to query data stored in external data sources such as Hadoop or Azure Blob Storage. This is excellent for importing data from other sources into your Synapse SQL data warehouse.

Caching: Dedicated SQL pool does not offer memory-optimized tables. Instead, Gen2 pools automatically cache frequently used columnstore data on local SSD storage, and result set caching can answer a repeated query without running it again. This can significantly enhance performance for repetitive, high-concurrency workloads.

47.Explain data formats in Synapse SQL ?

Data is saved in tables in Synapse SQL, and each column has a T-SQL data type, including:

CHAR and VARCHAR are fixed and variable length character strings, respectively. Text data is stored in them. NCHAR and NVARCHAR are the Unicode counterparts.

INT: This is a data type for storing 32-bit signed integers. TINYINT (8-bit), SMALLINT (16-bit), and BIGINT (64-bit) cover smaller and larger ranges.

FLOAT and REAL are data types for storing floating-point numbers. FLOAT is the more precise data type, whereas REAL is smaller and less precise. For exact values such as money amounts, DECIMAL is the better choice.

DATE and TIME: These data types are used to store dates and times, respectively. DATETIME2 stores both in one value.

BIT: This data type is used to store true/false values as 1 and 0. T-SQL has no BOOLEAN type.

BINARY and VARBINARY: These data types are used to hold binary data, such as images or files. BINARY is a fixed-length data type, whereas VARBINARY is a variable-length data type.

VARCHAR(MAX), NVARCHAR(MAX), and VARBINARY(MAX): These data types are used to store large volumes of character or binary data, the role that CLOB and BLOB types play in other databases.

JSON: Synapse SQL has no dedicated JSON data type. JSON (JavaScript Object Notation) data is stored as text in VARCHAR or NVARCHAR columns and processed with functions such as JSON_VALUE and OPENJSON. JSON is a lightweight data exchange format that is used to send data between a server and a client.

XML (eXtensible Markup Language): Dedicated SQL pool does not support the xml data type, so XML documents are stored as text. XML is a markup language that is used to specify a document’s structure and content.

Dedicated SQL pool also does not support user-defined types, nor the geometry, geography, hierarchyid, and sql_variant types. Columns of these types have to be converted to a supported type, usually a character or binary type, before the data is loaded.

48.Explain Dedicated SQL pool – Data Warehouse Units (DWUs) and compute Data Warehouse Units (cDWUs) ?

Dedicated SQL pool (formerly SQL Data Warehouse) is a fully managed cloud-based data warehouse solution that leverages Massively Parallel Processing (MPP) to run complicated queries across petabytes of data quickly.

In the Dedicated SQL pool, you choose a number of Data Warehouse Units (DWUs) to establish the service’s performance level. A DWU is a bundled measure of CPU, memory, and I/O, with larger numbers giving better performance at a higher price. You can change the number of DWUs to meet the performance requirements of your workload.

Compute Data Warehouse Units (cDWUs) are the unit used by the current generation of the service, Gen2, while plain DWUs were used by Gen1. The two are not combined: a pool is sized in one unit or the other. Gen2 service levels carry a “c” suffix, for example DW1000c.

You fine-tune the performance of your Dedicated SQL pool by moving between service levels. Increase the number of cDWUs, for example, to speed up scans, aggregations, and data loading, or decrease it to save cost when the workload is light.
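A dedicated SQL pool always splits table data into 60 distributions, and the service level decides how many compute nodes share them. The Python sketch below makes that relationship concrete for the Gen2 service levels. The node counts are taken from Microsoft’s published capacity tables as recalled at the time of writing and should be checked against the current documentation; the function name is hypothetical:

```python
TOTAL_DISTRIBUTIONS = 60  # fixed for every dedicated SQL pool

# Compute nodes per Gen2 service level (assumed from the published tables).
COMPUTE_NODES = {
    "DW100c": 1, "DW200c": 1, "DW300c": 1, "DW400c": 1, "DW500c": 1,
    "DW1000c": 2, "DW1500c": 3, "DW2000c": 4, "DW2500c": 5, "DW3000c": 6,
    "DW5000c": 10, "DW6000c": 12, "DW7500c": 15, "DW10000c": 20,
    "DW15000c": 30, "DW30000c": 60,
}


def distributions_per_node(service_level: str) -> int:
    """Return how many of the 60 distributions each compute node handles."""
    nodes = COMPUTE_NODES[service_level]
    return TOTAL_DISTRIBUTIONS // nodes


for level in ("DW500c", "DW1000c", "DW30000c"):
    print(level, COMPUTE_NODES[level], distributions_per_node(level))
```

Up to DW500c a single node works through all distributions, so scaling within that range gives the one node more resources rather than adding parallel nodes. From DW1000c upward each step spreads the same 60 distributions over more nodes.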

49.How to change data warehouse units in Synapse SQL?

In Azure Synapse, you can change the data warehouse units (DWUs) that are allocated to a dedicated SQL pool by using the ALTER DATABASE statement. Run it while connected to the master database of the server. Here’s the syntax:

ALTER DATABASE database_name
MODIFY (SERVICE_OBJECTIVE = 'DW100c');

Replace database_name with the name of your database, and DW100c with the appropriate service objective. The number of DWUs allocated to the database is determined by the service objective.

To alter the service objective of a database called “mydatabase” to DW200c, use the following statement:

ALTER DATABASE mydatabase
MODIFY (SERVICE_OBJECTIVE = 'DW200c');

The Azure Synapse documentation has a list of the available service objectives and the resources that they give.
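When scaling is automated, for example scaling up before a nightly load, the ALTER DATABASE statement is usually generated by a script. The following is a minimal Python sketch of such a generator. The function name is hypothetical, and it assumes Gen2 service objectives of the form DW followed by digits and a “c” suffix, such as DW200c:

```python
import re


def build_scale_statement(database: str, service_objective: str) -> str:
    """Return the T-SQL that sets a dedicated SQL pool to the given service objective."""
    # Reject anything that is not shaped like a Gen2 objective, e.g. DW200c.
    if not re.fullmatch(r"DW\d+c", service_objective):
        raise ValueError(f"not a Gen2 service objective: {service_objective!r}")
    # Bracket-quote the database name; a closing bracket is escaped by doubling it.
    quoted_db = "[" + database.replace("]", "]]") + "]"
    return (
        f"ALTER DATABASE {quoted_db} "
        f"MODIFY (SERVICE_OBJECTIVE = '{service_objective}');"
    )


statement = build_scale_statement("mydatabase", "DW200c")
print(statement)
```

Validating the objective and quoting the database name matters because ALTER DATABASE cannot take these values as query parameters, so they end up in the statement text itself. The statement has to be executed on a connection to the master database.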

50.How to View current DWU settings in Synapse SQL ?

The following query may be used to view the current DWU setting for a database in Azure Synapse SQL (formerly SQL Data Warehouse). Run it while connected to the master database:

SELECT db.name, ds.edition, ds.service_objective
FROM sys.database_service_objectives AS ds
JOIN sys.databases AS db ON ds.database_id = db.database_id
WHERE db.name = 'database_name';

Replace database_name with the name of your actual database. This will return the database’s current DWU setting in the service_objective column.

You may use the following query to see the DWU settings for all databases on the server:

SELECT db.name, ds.edition, ds.service_objective
FROM sys.database_service_objectives AS ds
JOIN sys.databases AS db ON ds.database_id = db.database_id;

This will return a list of all databases on the server, as well as their DWU settings.

51.How to Assess the number of data warehouse units ?

In Azure Synapse, you can use the following ways to determine the number of data warehouse units (DWUs) in use:

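Use dynamic management views (DMVs). In a dedicated SQL pool, sys.dm_pdw_exec_requests lists the queries that are running or waiting. A long queue of waiting queries suggests that the current DWU level does not keep up with the workload. To view the active requests, run the following query:

SELECT * FROM sys.dm_pdw_exec_requests WHERE status NOT IN ('Completed', 'Failed', 'Cancelled');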

Make use of the Azure portal. Under the “Monitoring” area of the Azure portal, you can monitor the current DWU usage for your Azure Synapse workspace.

Make use of the Azure Synapse Analytics REST API. You may get the current DWU utilisation for your Azure Synapse workspace using the REST API.

Make use of the Azure Synapse Analytics PowerShell cmdlets. You may get the current DWU usage for your Azure Synapse workspace using PowerShell cmdlets.

Keep in mind that DWU usage can fluctuate based on the workload processed by your Azure Synapse workspace.

52.Explain Service level objective ?

A service level objective (SLO) in Azure Synapse Analytics (formerly SQL DW) is the scalability setting that determines the cost and performance level of a dedicated SQL pool. It is not a contractual guarantee; uptime commitments are covered separately by the service level agreement (SLA). Each SLO corresponds to a number of data warehouse units, for example DW1000c for 1,000 cDWUs. When you create a dedicated SQL pool, you select one of the available SLOs. The higher the SLO, the higher the price and the better the performance.

The SLO sets the performance expectations for your dedicated SQL pool, because it fixes the compute, memory, and concurrency available to your workloads. If monitoring shows that queries or data loads are held back by a lack of resources, you can move the pool to a higher SLO, and back down when the extra capacity is no longer needed.

In short, the service level objective (SLO) is the named performance level of a dedicated SQL pool. It establishes how much capacity the pool has and is the setting you change to scale it.

53.Explain Performance Tiers and Data Warehouse Units ?

Performance tiers in Azure Synapse Analytics (formerly SQL Data Warehouse) are a mechanism to govern the resources consumed by your data warehouse. The resources comprise the number of data warehouse units (DWUs) assigned to your data warehouse, as well as the memory and the number of concurrent queries permitted.

There are two performance tiers:

Gen1: The original tier, measured in Data Warehouse Units (DWUs). The more DWUs you have, the faster your data warehouse can run queries.

Gen2: The current tier, measured in compute Data Warehouse Units (cDWUs), with service levels from DW100c to DW30000c. It adds a local SSD cache that keeps frequently used data close to the compute nodes. Depending on your workload, you can adjust the number of cDWUs as needed.

The serverless SQL pool is not a performance tier and has no DWUs. It scales automatically and bills for the amount of data each query processes. If your workload is unpredictable, this can be a cost-effective solution because you only pay for the queries that you run.

Data Warehouse Units (DWUs) are a measure of your data warehouse’s processing power. They are used to indicate your data warehouse’s performance level and are based on a combination of CPU, memory, and I/O resources. The greater the number of DWUs, the faster your data warehouse will be able to run queries. Depending on your workload, you can adjust the number of DWUs up or down as needed.

54.How to change data warehouse units ?

You can change the number of data warehouse units (DWUs) for a dedicated SQL pool in Azure Synapse SQL by using the Azure portal, PowerShell, T-SQL, or the REST API. Serverless SQL pool has no DWU setting because it scales automatically. Here’s how to do it in the Azure portal:

Sign in to the Azure portal using your Azure account.

Navigate to the Synapse workspace containing the dedicated SQL pool whose DWUs you wish to modify.

Select the dedicated SQL pool from the list of SQL pools.

Click Scale on the dedicated SQL pool page.

The current performance level is shown on the Scale page. Move the slider to the number of DWUs you want.

Click the Save button to start the scale operation.

The dedicated SQL pool now scales to the new DWU value. The update may take several minutes to take effect, and running queries are cancelled while the pool scales.
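Scaling can also be scripted. The sketch below is a minimal Python helper that builds the T-SQL ALTER DATABASE ... MODIFY (SERVICE_OBJECTIVE = ...) statement used to scale a dedicated SQL pool. The function name, the validation pattern, and the sample database name are assumptions made for illustration. The helper only produces the statement text; you would run it against the master database with whichever SQL client you use.

```python
import re

# Service level names look like DW300c (Gen2) or DW300 (Gen1).
# This pattern is an illustrative sanity check, not an official validator.
_SERVICE_OBJECTIVE = re.compile(r"DW[1-9][0-9]*c?")


def build_scale_statement(database: str, service_objective: str) -> str:
    """Return the T-SQL that requests a new service objective for a pool."""
    if not _SERVICE_OBJECTIVE.fullmatch(service_objective):
        raise ValueError(f"unexpected service objective: {service_objective!r}")
    # Double any closing bracket so the name is a safe delimited identifier.
    quoted = "[" + database.replace("]", "]]") + "]"
    return (
        f"ALTER DATABASE {quoted} "
        f"MODIFY (SERVICE_OBJECTIVE = '{service_objective}');"
    )


if __name__ == "__main__":
    # Hypothetical database name, used only to show the generated statement.
    print(build_scale_statement("mySampleDataWarehouse", "DW300c"))
```

Validating the service level before building the statement keeps malformed input out of the generated SQL.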

55.How to migrate Azure PowerShell from AzureRM to Az ?

Follow these steps to move Azure PowerShell from AzureRM to Az:

Install the Az module as follows:

Install-Module -Name Az -AllowClobber

Uninstall the AzureRM module:

Uninstall-Module -Name AzureRM -Force

Install any additional Az modules you require. To install the Az.Accounts module on its own, for example:

Install-Module -Name Az.Accounts

If you have scripts that use the AzureRM module, you can update the module names in your scripts with the following command:

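# Rewrite AzureRM cmdlet and module names to Az in every script under the path.
# -replace is case-insensitive, so "AzureRm" is matched as well as "AzureRM".
Get-ChildItem -Path "<path to your script>" -Recurse -Include "*.ps1","*.psm1","*.psd1" | ForEach-Object {
    $file = $_.FullName
    (Get-Content $file) -replace "AzureRM", "Az" | Set-Content $file
}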
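To make the effect of that rename concrete, the following Python sketch applies the same case-insensitive substitution to a short piece of text. The function name rename_cmdlets and the sample lines are illustrative only. A blanket rename does not account for cmdlets whose parameters changed between AzureRM and Az, so treat the result as a first pass and review the rewritten scripts.

```python
import re

# Case-insensitive match, mirroring the default behaviour of PowerShell's -replace.
_AZURERM = re.compile("AzureRM", re.IGNORECASE)


def rename_cmdlets(script_text: str) -> str:
    """Replace every occurrence of AzureRM (any casing) with Az."""
    return _AZURERM.sub("Az", script_text)


if __name__ == "__main__":
    sample = "Import-Module AzureRM.Compute\nGet-AzureRmVM -ResourceGroupName rg1"
    print(rename_cmdlets(sample))
```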

There is no separate profile migration step. After installing Az, sign in with the Az module and select the subscription you want to use as the default context:

# Sign in with the Az module
Connect-AzAccount

# List the subscriptions available to the signed-in account
Get-AzSubscription

# Set the default subscription for the current session
Set-AzContext -Subscription "<subscription name or ID>"

56.How to view the current DWU settings with T-SQL ?

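You can see the current DWU (Data Warehouse Units) setting of a dedicated SQL pool by querying the sys.database_service_objectives system view while connected to the master database of the server. Each database on the server is represented by a row in this view, and the service_objective column displays the current service level, such as DW1000c. The view identifies databases by database_id, so join it to sys.databases to get their names.

An example query that displays the DWU settings for all databases on the server is as follows:

SELECT db.name AS [Database], ds.edition AS [Edition], ds.service_objective AS [Service Objective]
FROM sys.database_service_objectives AS ds
JOIN sys.databases AS db ON ds.database_id = db.database_id;

This will provide a result set with one row for each database, displaying its name, edition, and service objective.

If you want to see the DWU setting for a certain database, use a WHERE clause to narrow down the results:

SELECT db.name AS [Database], ds.service_objective AS [Service Objective]
FROM sys.database_service_objectives AS ds
JOIN sys.databases AS db ON ds.database_id = db.database_id
WHERE db.name = 'mydatabase';

This will yield a result set with a single row containing the DWU setting for the mydatabase database.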

57.How to update Database REST API to change the DWUs ?

You can use the Update operation of the Azure REST API for databases to change the Data Warehouse Units (DWUs) of a dedicated SQL pool (previously SQL Data Warehouse) in Azure Synapse Analytics. The request goes to Azure Resource Manager, not to the SQL endpoint of the server.

Here’s an example of how to use the REST API to change the DWUs for a database:

Create the API endpoint URL. For a pool hosted on a logical SQL server, the URL has this form:

https://management.azure.com/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/Microsoft.Sql/servers/{serverName}/databases/{databaseName}?api-version=2021-11-01

Replace {subscriptionId}, {resourceGroupName}, {serverName}, and {databaseName} with your own values. A pool created inside a Synapse workspace is addressed through the Microsoft.Synapse resource provider instead.

Set the sku name in the request body to the desired service level. To change the DWUs to DW500c, for example, use the following request body:

{
  "sku": {
    "name": "DW500c"
  }
}

Send an HTTP PATCH request to the API endpoint with the request body from the previous step.

Here’s an example of how to use cURL to make the PATCH request:

curl -X PATCH \
  "https://management.azure.com/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/Microsoft.Sql/servers/{serverName}/databases/{databaseName}?api-version=2021-11-01" \
  -H "Authorization: Bearer {accessToken}" \
  -H "Content-Type: application/json" \
  -d '{"sku": {"name": "DW500c"}}'

Replace the placeholders in the URL with your own values, and {accessToken} with a valid Microsoft Entra ID (Azure AD) access token for Azure Resource Manager.
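The request can also be assembled in code before it is sent. The Python sketch below builds the URL and JSON body for a PATCH against the Azure Resource Manager endpoint (management.azure.com) for a pool hosted on a logical SQL server. The function name, the sample identifiers, and the api-version value passed in are assumptions for illustration, and the sketch deliberately stops short of sending the request or acquiring a token.

```python
import json

ARM_ENDPOINT = "https://management.azure.com"


def build_scale_request(subscription_id, resource_group, server_name,
                        database_name, service_objective, api_version):
    """Return (url, body) for a PATCH that changes the pool's service level."""
    url = (
        f"{ARM_ENDPOINT}/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Sql/servers/{server_name}"
        f"/databases/{database_name}?api-version={api_version}"
    )
    # The service level is passed as the SKU name, for example DW500c.
    body = json.dumps({"sku": {"name": service_objective}})
    return url, body


if __name__ == "__main__":
    # All identifiers below are placeholders, not real resources.
    url, body = build_scale_request(
        "00000000-0000-0000-0000-000000000000", "myResourceGroup",
        "myserver", "mydatabase", "DW500c", "2021-11-01",
    )
    print(url)
    print(body)
```

Keeping the URL and body construction in one function makes it easy to log or inspect the request before handing it to an HTTP client.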

58.How to Check status of DWU changes ?

You can use the sys.dm_operation_status dynamic management view (DMV) to examine the status of a change to the data warehouse unit (DWU) setting of a dedicated SQL pool (previously SQL Data Warehouse). This DMV is queried from the master database and returns a row for each management operation on a database, including changes to the DWU setting.

To check the progress of a DWU change request, use the following query:

SELECT major_resource_id, operation, state_desc, percent_complete, start_time, last_modify_time
FROM sys.dm_operation_status
WHERE resource_type_desc = 'Database'
AND major_resource_id = 'mydatabase'
AND state_desc = 'IN_PROGRESS';

This will return any operation that is still running for the mydatabase database, including a change to the DWU setting. The percent_complete column shows how much of the operation has finished, and the state_desc column shows its current state (for example IN_PROGRESS, COMPLETED, or FAILED). Remove the last condition to see completed operations as well.

To wait for a scale operation to finish, rerun the query until it no longer returns a row in the IN_PROGRESS state.
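Waiting for a DWU change to complete is usually done by polling. The Python sketch below shows one way to structure that loop, assuming the status comes from the state_desc column of sys.dm_operation_status, which reports PENDING or IN_PROGRESS while an operation is still running. The function wait_for_scale and its fetch_states callback are hypothetical names; the callback stands in for whatever code runs the status query with your SQL client.

```python
import time
from typing import Callable, Iterable

# States that mean the operation has not finished yet.
ACTIVE_STATES = {"PENDING", "IN_PROGRESS"}


def wait_for_scale(fetch_states: Callable[[], Iterable[str]],
                   poll_seconds: float = 5.0,
                   max_polls: int = 120) -> bool:
    """Poll until no operation is reported as still running.

    fetch_states is supplied by the caller; it runs the status query and
    returns the state_desc value of each row.
    Returns True once nothing is active, False if max_polls is exhausted.
    """
    for _ in range(max_polls):
        states = set(fetch_states())
        if not states & ACTIVE_STATES:
            return True
        time.sleep(poll_seconds)
    return False


if __name__ == "__main__":
    # Simulated query results standing in for a real database connection.
    fake_results = iter([["IN_PROGRESS"], ["IN_PROGRESS"], ["COMPLETED"]])
    finished = wait_for_scale(lambda: next(fake_results), poll_seconds=0)
    print("scale finished:", finished)
```

Bounding the number of polls keeps a script from waiting forever if the operation stalls or the status query keeps failing.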

59.Explain Scaling workflow ?

Scaling in Synapse SQL refers to the process of increasing or decreasing the compute resources assigned to a dedicated SQL pool by changing its data warehouse units (DWUs). This can be done to adapt to changing workload demands or to control cost. Because compute and storage are separate, scaling does not move or change the stored data.

When you start a scale operation, the following happens:

The system ends all open sessions and rolls back any open transactions so that the pool is in a consistent state. Scaling begins only after the rollback is complete.

For a scale-up, the system detaches all compute nodes, provisions the additional compute nodes, and then reattaches them to the storage layer.

For a scale-down, the system detaches all compute nodes and then reattaches only the nodes that are still needed to the storage layer.

You can start a scale operation manually from the Azure portal, PowerShell, T-SQL, or the REST API. A dedicated SQL pool has no built-in auto-scale rules based on usage metrics, but scaling can be scheduled or automated by calling the same interfaces from a service such as Azure Automation or Azure Functions. Serverless SQL pool scales automatically and needs no scaling workflow.

Whatever scaling strategy you use, it is important to monitor the performance of your SQL pool and adjust the DWU setting as needed so that the pool can meet the needs of your business.

60.What are the Capacity limits for dedicated SQL pool in Azure Synapse Analytics ?

Azure Synapse Analytics (previously SQL DW) is a cloud-based data warehouse that can handle datasets in the petabyte range. To store and query data in Synapse Analytics, you can utilise either a serverless SQL pool or a dedicated SQL pool.

The capacity limits for dedicated SQL pools are as follows:

A dedicated SQL pool (Gen2) can store up to 240 TB of rowstore data, compressed on disk. Storage for columnstore tables is unlimited.

A dedicated SQL pool can have a maximum of 100,000 tables.

A table can have a maximum of 1,024 columns.

The defined size of a row is limited to 8,060 bytes.

A table can have up to 15,000 partitions.

Please keep in mind that these restrictions may change as Azure’s service offerings evolve. You can view the most recent capacity limits by visiting the Azure documentation or contacting Azure support.

61.Explain Workload management in Synapse SQL ?

Workload management in Synapse SQL entails setting resource limits and priorities for the queries and workloads that run on a dedicated SQL pool. This ensures that the pool’s resources are used efficiently and that queries and workloads are processed in a way that fits the organization’s demands.

In a dedicated SQL pool, workload management is built on the following concepts:

Workload classification: Each incoming request is assigned to a workload group by a classifier, which is created with CREATE WORKLOAD CLASSIFIER. A classifier can match on attributes such as the login or role that submitted the request, a query label, or the time of day.

Workload importance: A classifier can also assign an importance level (low, below_normal, normal, above_normal, or high). When requests are queued, those with higher importance get access to resources first.

Workload isolation: A workload group, created with CREATE WORKLOAD GROUP, reserves a minimum share of resources for its requests and can cap the maximum share they consume, so that one workload cannot starve another.

Monitoring: Dynamic management views such as sys.dm_pdw_exec_requests show which workload group, classifier, and importance each request received. This helps you identify long-running or resource-heavy queries and adjust the groups and classifiers accordingly.

62.Explain Database objects in Synapse SQL ?

You can store and manage your data using a variety of database object types in Azure Synapse SQL (formerly known as SQL Data Warehouse):

Tables: In a database, tables serve as the main mechanism for storing data. To store organised data in rows and columns, use tables.

Views: Based on a SELECT command, views are constructed as virtual tables. They display data from one or more tables or views rather than actually storing any data themselves.

Stored procedures: Stored procedures are named collections of SQL statements that are stored in the database and run with a single call. They can be used to carry out routine tasks or to encapsulate complex logic.

Functions: Functions are similar to stored procedures, but they return a value. Scalar functions return a single value, while table-valued functions return a set of rows. Support differs between the two pool types, so check which kinds of function your pool accepts.

Indexes: Indexes are data structures that speed up queries by letting the engine locate rows quickly based on the values in one or more columns. In a dedicated SQL pool, a table is stored as a clustered columnstore index by default.

Triggers: Triggers are specialised stored procedures that run automatically in response to events such as INSERT, UPDATE, or DELETE operations on a table. They are a SQL Server feature and are not supported in Synapse SQL.

Sequences: Sequences are objects that generate a series of numbers, typically for unique IDs. Synapse SQL does not support them; in a dedicated SQL pool, an IDENTITY column is the usual way to generate surrogate keys.

Synonyms: Synonyms are objects that provide an alternative name for another database object. They are also not supported in Synapse SQL.

63.Explain about Polybase Loads?

PolyBase is a feature of SQL Server and Azure Synapse Analytics (previously Azure SQL Data Warehouse) that lets you use Transact-SQL (T-SQL) statements to load data from, and export data to, Azure Blob storage or Azure Data Lake Store. With PolyBase, you can access and query data in external data sources as if it were stored in tables of the database.

The following two approaches are available when using PolyBase with external data sources:

Regular Load: Data is read from the external data source and stored in a table in SQL Server or Azure SQL Data Warehouse, typically with CREATE TABLE AS SELECT (CTAS) or INSERT ... SELECT over an external table. You can load an empty table or append to an existing one.

External Table Load: An external table that points to the external data source is created in the database with CREATE EXTERNAL TABLE. The data is not physically loaded into the database. Instead, the external table acts like a view over the external data source, and you query it with ordinary T-SQL statements.

Data from several kinds of external data source, including Hadoop, Azure Blob storage, Azure Data Lake Store, and Oracle, can be accessed with PolyBase, depending on the product and version.

64.Explain Metadata ?

In Synapse SQL, metadata is data that describes other data. It provides details about the structure, organisation, and properties of the data kept in a database. Examples of metadata in Synapse SQL include the names of tables and columns, data types, and constraints.